Resume Skills and Keywords for Big Data Engineer

A Big Data Engineer is responsible for designing, implementing, and maintaining large-scale data processing systems that handle massive volumes of structured and unstructured data. They work with cutting-edge big data technologies such as Hadoop, Spark, and Kafka to develop efficient ETL processes, ensuring the seamless extraction, transformation, and loading of data. Collaborating closely with data scientists, analysts, and other stakeholders, Big Data Engineers integrate data processing capabilities into analytical models and applications. Additionally, they focus on optimising data models, fine-tuning workflows for performance, and implementing robust security measures to protect sensitive information. Their role involves continuous monitoring, maintenance, and troubleshooting of big data solutions, contributing to the creation of scalable and high-performance data-driven environments within organisations.

Skills required for a Big Data Engineer role:

- Hadoop

- Spark

- Java

- Scala

- Data Modelling

- Extract, Transform, Load

- Database Management

- Distributed Computing

- Data Warehousing

- Data Security and Compliance

- Machine Learning

- Troubleshooting

- Adaptability

- Analytical Thinking

- Strong Communication

- Leadership Skills

What recruiters look for in a Big Data Engineer resume:

- Proven experience as a Big Data Engineer or in a similar role.

- Proficiency in programming languages such as Java, Scala, or Python.

- Strong understanding of big data technologies, including Hadoop, Spark, and related ecosystems.

- Experience with data modelling, optimisation, and performance tuning.

- Knowledge of data security principles and best practices.

What can make your Big Data Engineer resume stand out:

A strong summary that demonstrates your skills, experience, and background in data engineering

- An exceptionally talented Big Data Engineer with experience in designing, implementing, and refining large-scale data processing solutions. Equipped with broad knowledge of distributed computing, big data technologies, and programming languages, with expertise in building efficient data pipelines that reliably move information from many sources. Strong in data modelling, ETL procedures, and cloud platforms, and thrives on building scalable systems that support data-driven decision-making and advanced analytics.

Targeted job description

- Establish monitoring and alerting systems to ensure the health and performance of big data solutions.

- Perform routine maintenance and troubleshooting of data processing systems.

- Design and implement data models for efficient storage and retrieval of large datasets.

- Develop robust and efficient ETL (Extract, Transform, Load) processes to ingest, process, and store large volumes of structured and unstructured data.

- Design, implement, and maintain scalable and distributed data processing architectures using big data technologies (e.g., Hadoop, Spark, Kafka).

Related academic background

- B.Tech in Computer Science at Vellore Institute of Technology, New Delhi | 2019

Sample Resume of Big Data Engineer in Text Format

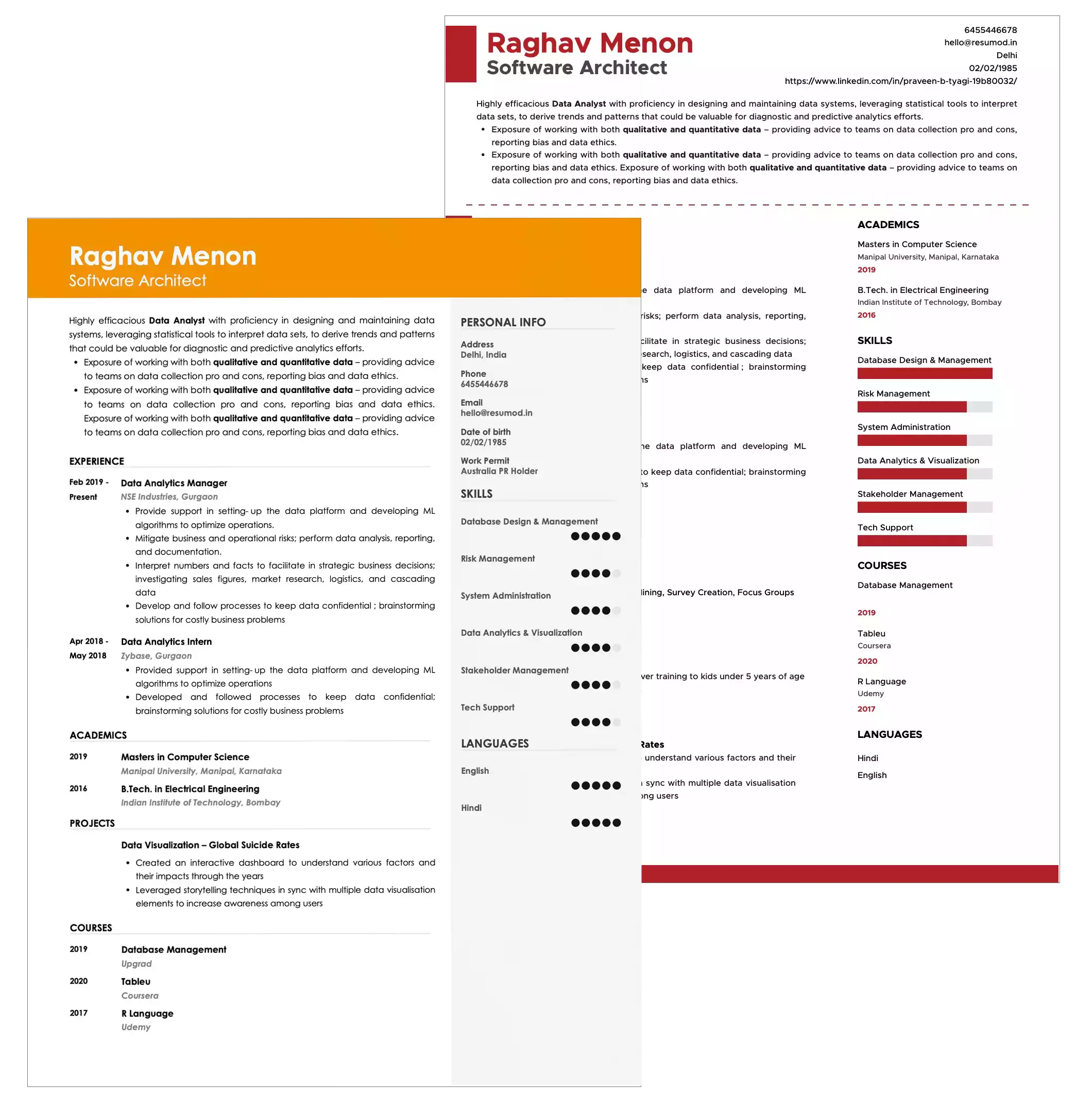

VIVEK GUPTA

Big Data Engineer

+91-9876543210 | vivek@gmail.com | New Delhi, India

SUMMARY

An exceptionally talented Big Data Engineer with experience in designing, implementing, and refining large-scale data processing solutions. Equipped with broad knowledge of distributed computing, big data technologies, and programming languages, with expertise in building efficient data pipelines that reliably move information from many sources. Strong in data modelling, ETL procedures, and cloud platforms, and thrives on building scalable systems that support data-driven decision-making and advanced analytics.

EMPLOYMENT HISTORY

Big Data Engineer at One-Tech Pvt. Ltd., New Delhi | Sep 2021 to Present

- Designing and implementing scalable and distributed big data architectures.

- Developing efficient data ingestion pipelines from various sources into big data systems.

- Implementing batch and real-time data processing using technologies such as Apache Spark and Apache Flink.

- Designing and maintaining scalable data storage solutions, including data lakes and warehouses.

- Developing and optimising ETL processes for transforming and cleaning large datasets.

- Implementing security measures for protecting sensitive data in big data systems.

- Evaluating and selecting appropriate big data technologies and frameworks.

- Implementing monitoring solutions for tracking the health and performance of big data systems.

Big Data Engineer at Ryzen Technologies, New Delhi | Sep 2019 to Aug 2021

- Created and maintained comprehensive technical documentation for big data processes and architectures.

- Designed data structures to facilitate advanced analytics and machine learning.

- Troubleshot and resolved issues related to data processing and storage.

- Optimised big data processes for performance, scalability, and efficiency.

- Implemented and tuned distributed computing solutions.

- Communicated technical concepts effectively to both technical and non-technical stakeholders.

EDUCATION

B.Tech in Computer Science at Vellore Institute of Technology, New Delhi | 2019

SKILLS

Hadoop | Spark | Java | Scala | Data Modelling | Extract, Transform, Load | Database Management | Distributed Computing | Data Warehousing | Data Security and Compliance | Machine Learning | Troubleshooting

LANGUAGES

English

Hindi